publications

2025

- [NeurIPS-SEA] Communicating Plans, Not Percepts: Scalable Multi-Agent Coordination with Embodied World Models. Brennen A. Hill, Mant Koh En Wei, and Thangavel Jishnuanandh. Proceedings of NeurIPS 2025 Workshop on Scaling Environments for Agents.

- Also in: NeurIPS 2025 Workshop on Embodied World Models for Decision Making

- Also in: NeurIPS 2025 Workshop on Optimization for Machine Learning

Robust coordination is critical for effective decision-making in multi-agent systems, especially under partial observability. A central question in Multi-Agent Reinforcement Learning (MARL) is whether to engineer communication protocols or learn them end-to-end. We investigate this dichotomy using embodied world models. We propose and compare two communication strategies for a cooperative task-allocation problem. The first, Learned Direct Communication (LDC), learns a protocol end-to-end, with agents generating messages and actions concurrently. The second, Intention Communication, uses an engineered inductive bias: a compact, learned world model, the Imagined Trajectory Generation Module (ITGM), to simulate future states. Agents then communicate a summary of this plan. We evaluate these approaches on goal-directed interaction in a grid world, a canonical abstraction for embodied AI problems. Our experiments reveal that while emergent communication is viable in simple settings, the engineered, world model-based approach shows superior performance, sample efficiency, and scalability as complexity increases. These findings advocate for integrating structured, predictive models into MARL agents to enable active, goal-driven coordination.
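The intention-communication idea in this abstract can be sketched in a few lines. This is a minimal illustration, not the paper's ITGM: a hand-written greedy transition rule stands in for the learned world model, and the names `imagine_trajectory`, `summarize_plan`, and `choose_goal`, as well as the message format, are hypothetical.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def imagine_trajectory(start: Cell, goal: Cell, horizon: int) -> List[Cell]:
    """Roll a simple transition model forward for up to `horizon` steps.

    Assumption: a greedy, deterministic grid model replaces the learned
    world model described in the abstract.
    """
    x, y = start
    path: List[Cell] = []
    for _ in range(horizon):
        if (x, y) == goal:
            break
        if x != goal[0]:
            x += 1 if goal[0] > x else -1
        else:
            y += 1 if goal[1] > y else -1
        path.append((x, y))
    return path


def summarize_plan(path: List[Cell], start: Cell) -> Dict[str, object]:
    """Compress the imagined trajectory into a compact message."""
    end = path[-1] if path else start
    return {"target": end, "steps": len(path)}


def choose_goal(position: Cell, goals: List[Cell], messages: List[dict]) -> Cell:
    """Pick the nearest goal that no teammate has announced as its target."""
    claimed = {m["target"] for m in messages}
    free = [g for g in goals if g not in claimed] or goals
    return min(free, key=lambda g: abs(g[0] - position[0]) + abs(g[1] - position[1]))


# Agent A plans first and broadcasts a summary of its plan.
goals = [(3, 0), (0, 4)]
goal_a = choose_goal((0, 0), goals, [])
message_a = summarize_plan(imagine_trajectory((0, 0), goal_a, horizon=10), (0, 0))

# Agent B reads the message and avoids duplicating A's target.
goal_b = choose_goal((1, 0), goals, [message_a])
```

Without the message, agent B would also head for the nearer goal at `(3, 0)`; with it, B takes `(0, 4)`. The sketch only matches targets to goals when the horizon is long enough for the imagined trajectory to reach the goal.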

@article{marlcomm2025, title = {Communicating Plans, Not Percepts: Scalable Multi-Agent Coordination with Embodied World Models}, author = {Hill, Brennen A. and Wei, Mant Koh En and Jishnuanandh, Thangavel}, journal = {Proceedings of NeurIPS 2025 Workshop on Scaling Environments for Agents}, year = {2025}, also_in = {NeurIPS 2025 Workshop on Embodied World Models for Decision Making; NeurIPS 2025 Workshop on Optimization for Machine Learning}, }

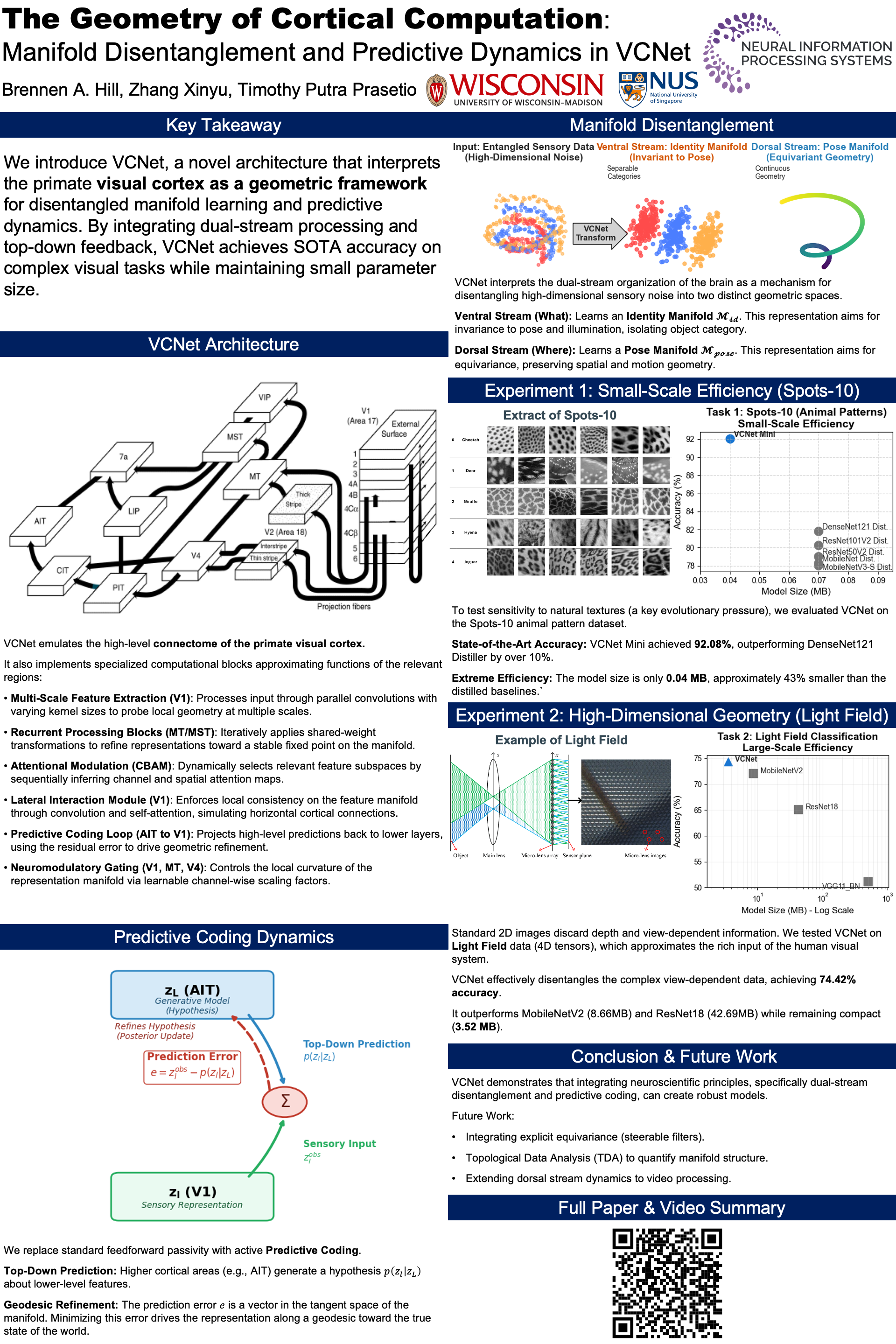

- [NeurIPS-NeurReps] The Geometry of Cortical Computation: Manifold Disentanglement and Predictive Dynamics in VCNet. Brennen A. Hill, Zhang Xinyu, and Timothy Putra Prasetio. Proceedings of NeurIPS 2025 Workshop on Symmetry and Geometry in Neural Representations.

- Also in: NeurIPS 2025 Workshop on Interpreting Cognition in Deep Learning Models

Despite their success, modern convolutional neural networks (CNNs) exhibit fundamental limitations, including data inefficiency, poor out-of-distribution generalization, and vulnerability to adversarial perturbations. These shortcomings can be traced to a lack of inductive biases that reflect the inherent geometric structure of the visual world. The primate visual system, in contrast, demonstrates superior efficiency and robustness, suggesting that its architectural and computational principles, which evolved to internalize these structures, may offer a blueprint for more capable artificial vision. This paper introduces Visual Cortex Network (VCNet), a novel neural network architecture whose design is informed by the macro-scale organization of the primate visual cortex. VCNet is framed as a geometric framework that emulates key biological mechanisms, including hierarchical processing across distinct cortical areas, dual-stream information segregation for learning disentangled representations, and top-down predictive feedback for representation refinement. We interpret these mechanisms through the lens of geometry and dynamical systems, positing that they guide the learning of structured, low-dimensional neural manifolds. We evaluate VCNet on two specialized benchmarks: the Spots-10 animal pattern dataset, which probes sensitivity to natural textures, and a light field image classification task, which requires processing higher-dimensional visual data. Our results show that VCNet achieves state-of-the-art accuracy of 92.1% on Spots-10 and 74.4% on the light field dataset, surpassing contemporary models of comparable size. This work demonstrates that integrating high-level neuroscientific principles, viewed through a geometric lens, can lead to more efficient and robust models, providing a promising direction for addressing long-standing challenges in machine learning.

@article{vcnet2025, title = {The Geometry of Cortical Computation: Manifold Disentanglement and Predictive Dynamics in VCNet}, author = {Hill, Brennen A. and Xinyu, Zhang and Prasetio, Timothy Putra}, journal = {Proceedings of NeurIPS 2025 Workshop on Symmetry and Geometry in Neural Representations}, year = {2025}, also_in = {NeurIPS 2025 Workshop on Interpreting Cognition in Deep Learning Models}, }

- [NSF BRAID] Structural Plasticity as Active Inference: A Biologically-Inspired Architecture for Homeostatic Control. Brennen A. Hill. In National Science Foundation (NSF) workshop on Brain-Inspired Dynamics for Engineering Energy-Efficient Circuits and Artificial Intelligence.

Traditional neural networks, while powerful, rely on biologically implausible learning mechanisms such as global backpropagation. This paper introduces the Structurally Adaptive Predictive Inference Network (SAPIN), a novel computational model inspired by the principles of active inference and the morphological plasticity observed in biological neural cultures. SAPIN operates on a 2D grid where processing units, or cells, learn by minimizing local prediction errors. The model features two primary, concurrent learning mechanisms: a local, Hebbian-like synaptic plasticity rule based on the temporal difference between a cell’s actual activation and its learned expectation, and a structural plasticity mechanism where cells physically migrate across the grid to optimize their information-receptive fields. This dual approach allows the network to learn both how to process information (synaptic weights) and where to position its computational resources (network topology). We validated the SAPIN model on the classic CartPole reinforcement learning benchmark. Our results demonstrate that the architecture can successfully solve the CartPole task, achieving robust performance. The network’s intrinsic drive to minimize prediction error and maintain homeostasis was sufficient to discover a stable balancing policy. We also found that while continual learning led to instability, locking the network’s parameters after achieving success resulted in a stable policy. When evaluated for 100 episodes post-locking (repeated over 100 successful agents), the locked networks maintained an average 82% success rate.
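The two concurrent mechanisms in this abstract, a local prediction-error weight update and cell migration on a grid, might look roughly like the sketch below. The abstract does not give the actual update equations, so everything here is an assumption made for illustration: the gradient-style weight rule, the running-average expectation, the "move toward the strongest input" migration heuristic, and the names `Cell`, `local_update`, and `migrate`.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Cell:
    position: Tuple[int, int]
    weights: List[float]
    expectation: float = 0.0  # learned expectation of this cell's activation

    def activate(self, inputs: List[float]) -> float:
        return sum(w * x for w, x in zip(self.weights, inputs))

    def local_update(self, inputs: List[float], lr: float = 0.1, ema: float = 0.5) -> float:
        """Update weights from the local prediction error only (no global backprop).

        Assumed rule: step the weights against error * input, then move the
        expectation part of the way toward the observed activation.
        """
        error = self.activate(inputs) - self.expectation
        self.weights = [w - lr * error * x for w, x in zip(self.weights, inputs)]
        self.expectation += ema * error
        return error


def migrate(cell: Cell, source_positions: List[Tuple[int, int]], grid_size: int) -> None:
    """Move the cell one grid step toward its most strongly weighted input source.

    Assumed heuristic standing in for the structural plasticity mechanism.
    """
    strongest = max(range(len(cell.weights)), key=lambda i: abs(cell.weights[i]))
    tx, ty = source_positions[strongest]
    x, y = cell.position

    def step(a: int, b: int) -> int:
        if b > a:
            return a + 1
        if b < a:
            return a - 1
        return a

    # Clamp to the grid so the cell never leaves the 2D sheet.
    nx = min(max(step(x, tx), 0), grid_size - 1)
    ny = min(max(step(y, ty), 0), grid_size - 1)
    cell.position = (nx, ny)
```

Both functions touch only one cell's own state and inputs, which is the property the abstract contrasts with global backpropagation.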

@article{sapin2025, title = {Structural Plasticity as Active Inference: A Biologically-Inspired Architecture for Homeostatic Control.}, author = {Hill, Brennen A.}, journal = {In National Science Foundation (NSF) workshop on Brain-Inspired Dynamics for Engineering Energy-Efficient Circuits and Artificial Intelligence}, year = {2025}, }

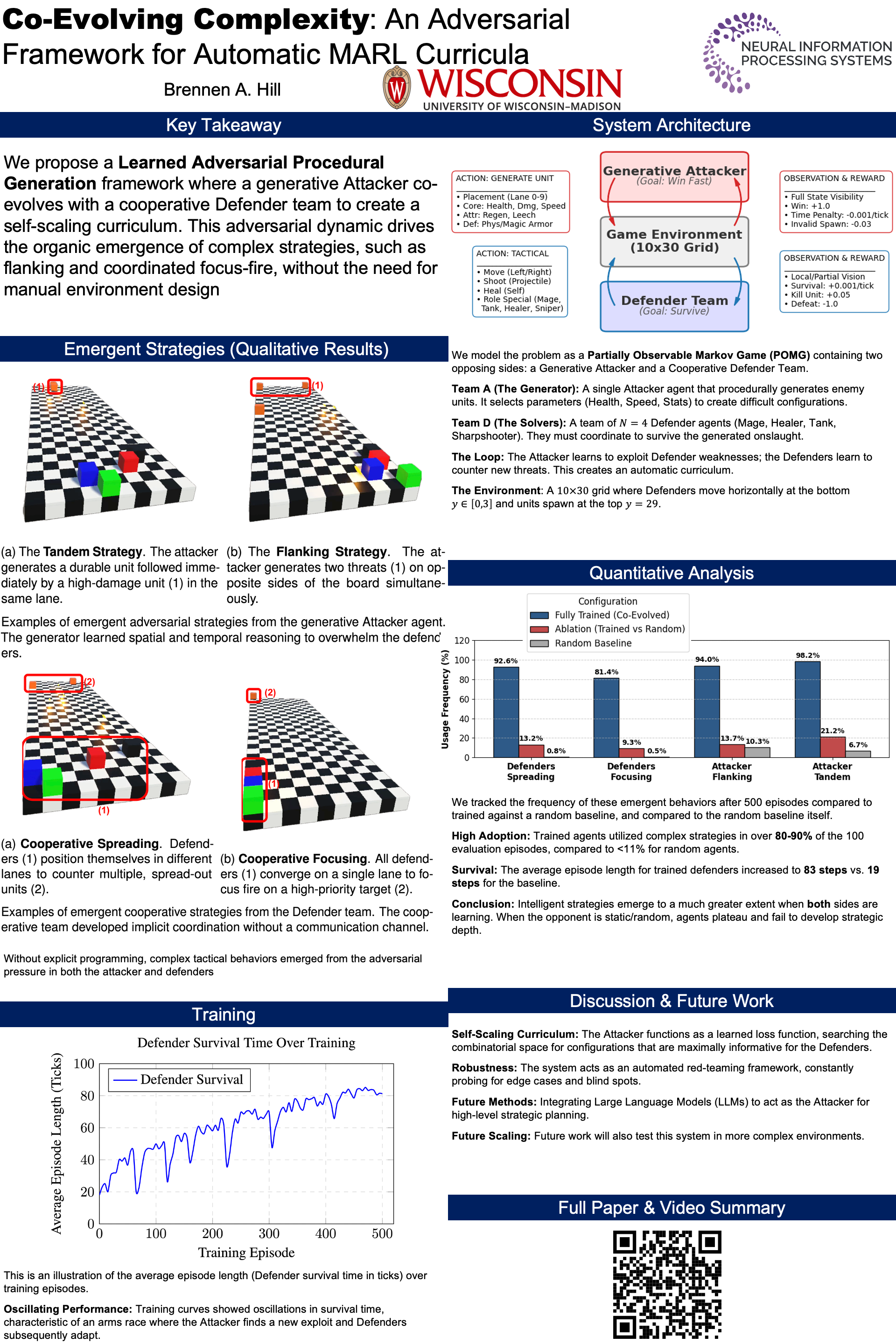

- [NeurIPS-SEA] Co-Evolving Complexity: An Adversarial Framework for Automatic MARL Curricula. Brennen A. Hill. Proceedings of NeurIPS 2025 Workshop on Scaling Environments for Agents.

The advancement of general-purpose intelligent agents is intrinsically linked to the environments in which they are trained. While scaling models and datasets has yielded remarkable capabilities, scaling the complexity, diversity, and interactivity of environments remains a crucial bottleneck. Hand-crafted environments are finite and often contain implicit biases, limiting the potential for agents to develop truly generalizable and robust skills. In this work, we propose a paradigm for generating a boundless and adaptive curriculum of challenges by framing the environment generation process as an adversarial game. We introduce a system where a team of cooperative multi-agent defenders learns to survive against a procedurally generative attacker. The attacker agent learns to produce increasingly challenging configurations of enemy units, dynamically creating novel worlds tailored to exploit the defenders’ current weaknesses. Concurrently, the defender team learns cooperative strategies to overcome these generated threats. This co-evolutionary dynamic creates a self-scaling environment where complexity arises organically from the adversarial interaction, providing an effectively infinite stream of novel and relevant training data. We demonstrate that with minimal training, this approach leads to the emergence of complex, intelligent behaviors, such as flanking and shielding by the attacker, and focus-fire and spreading by the defenders. Our findings suggest that adversarial co-evolution is a powerful mechanism for automatically scaling environmental complexity, driving agents towards greater robustness and strategic depth.
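The escalation dynamic described in this abstract can be illustrated with a deliberately tiny toy loop. It is not the paper's system: scalar `attacker_level` and `defender_skill` values stand in for learned policies, the win rule and step size are assumptions, and the function name `co_evolve` is hypothetical.

```python
from typing import List, Tuple


def co_evolve(
    rounds: int,
    defender_skill: float = 1.0,
    attacker_level: float = 1.0,
    step: float = 0.5,
) -> List[Tuple[float, float, bool]]:
    """Alternate updates so each side adapts to the other's current strength.

    Assumption: defenders win a round when their skill is at least the
    attacker's level; whichever side loses the round is the one that improves.
    """
    history = []
    for _ in range(rounds):
        defenders_win = defender_skill >= attacker_level
        if defenders_win:
            # The attacker generates a harder configuration next time.
            attacker_level += step
        else:
            # The defenders learn from the scenario they just failed.
            defender_skill += step
        history.append((attacker_level, defender_skill, defenders_win))
    return history


history = co_evolve(rounds=4)
```

After four rounds both values have risen from 1.0 to 2.0, which shows how difficulty and competence can ratchet upward together without a hand-designed curriculum.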

@article{adversarialwm2025, title = {Co-Evolving Complexity: An Adversarial Framework for Automatic MARL Curricula}, author = {Hill, Brennen A.}, journal = {Proceedings of NeurIPS 2025 Workshop on Scaling Environments for Agents}, year = {2025}, }

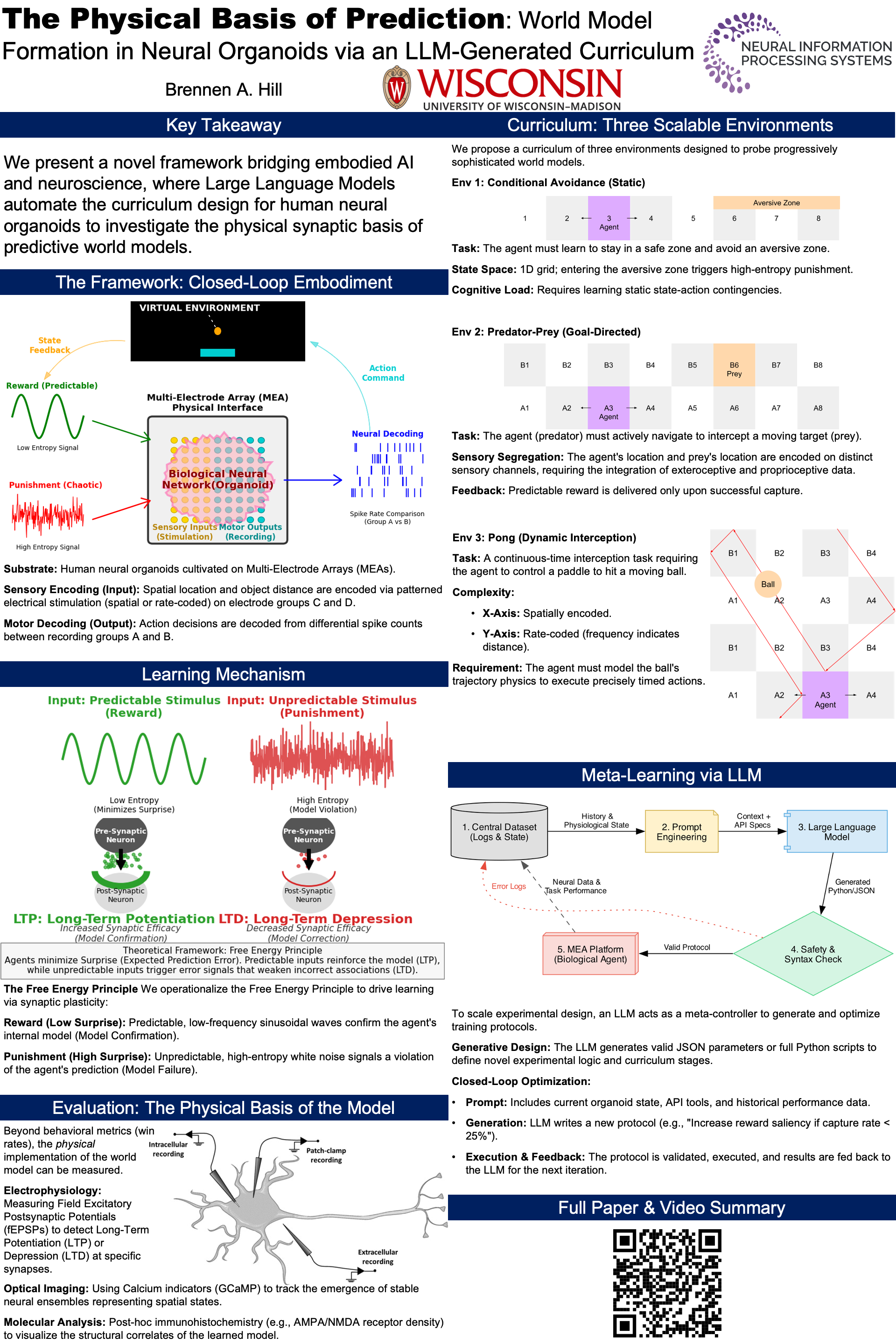

- [NeurIPS-SEA] The Physical Basis of Prediction: World Model Formation in Neural Organoids via an LLM-Generated Curriculum. Brennen A. Hill. Proceedings of NeurIPS 2025 Workshop on Scaling Environments for Agents.

- Also in: NeurIPS 2025 Workshop on Embodied World Models for Decision Making

The capacity of an embodied agent to understand, predict, and interact with its environment is fundamentally contingent on an internal world model. This paper introduces a novel framework for investigating the formation and adaptation of such world models within a biological substrate: human neural organoids. We present a curriculum of three scalable, closed-loop virtual environments designed to train these biological agents and probe the underlying synaptic mechanisms of learning, such as long-term potentiation (LTP) and long-term depression (LTD). We detail the design of three distinct task environments that demand progressively more sophisticated world models for successful decision-making: (1) a conditional avoidance task for learning static state-action contingencies, (2) a one-dimensional predator-prey scenario for goal-directed interaction, and (3) a replication of the classic Pong game for modeling dynamic, continuous-time systems. For each environment, we formalize the state and action spaces, the sensory encoding and motor decoding mechanisms, and the feedback protocols based on predictable (reward) and unpredictable (punishment) stimulation, which serve to drive model refinement. In a significant methodological advance, we propose a meta-learning approach where a Large Language Model automates the generative design and optimization of experimental protocols, thereby scaling the process of environment and curriculum design. Finally, we outline a multi-modal evaluation strategy that moves beyond task performance to directly measure the physical correlates of the learned world model by quantifying synaptic plasticity at electrophysiological, cellular, and molecular levels. This work bridges the gap between model-based reinforcement learning and computational neuroscience, offering a unique platform for studying embodiment, decision-making, and the physical basis of intelligence.

@article{organoid2025, title = {The Physical Basis of Prediction: World Model Formation in Neural Organoids via an LLM-Generated Curriculum}, author = {Hill, Brennen A.}, journal = {Proceedings of NeurIPS 2025 Workshop on Scaling Environments for Agents}, year = {2025}, also_in = {NeurIPS 2025 Workshop on Embodied World Models for Decision Making}, }

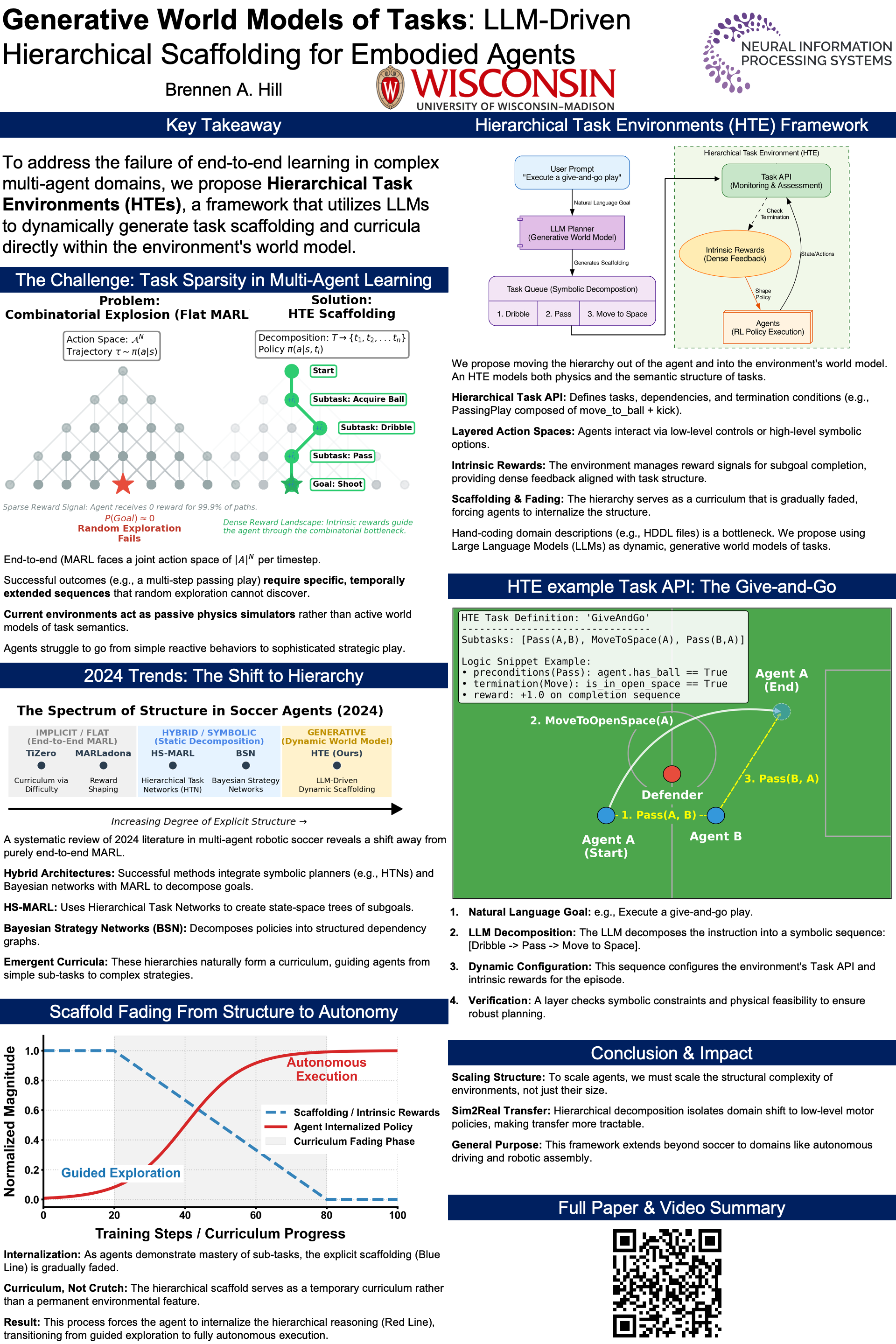

- [NeurIPS-EWM] Generative World Models of Tasks: LLM-Driven Hierarchical Scaffolding for Embodied Agents. Brennen A. Hill. NeurIPS 2025 Workshop on Embodied World Models for Decision Making.

Recent advances in agent development have focused on scaling model size and raw interaction data, mirroring the successes seen in large language models. However, for complex, long-horizon multi-agent tasks such as robotic soccer, this end-to-end approach often fails due to intractable exploration spaces and sparse rewards. This position paper argues that the next frontier in developing embodied world models is not merely increasing the fidelity or size of environments, but scaling their structural complexity through explicit hierarchical scaffolding. We posit that an effective world model for decision-making must model not only the world’s physics but also its task semantics. Drawing from a systematic review of 2024 research in low-resource multi-agent soccer, we identify a clear trend towards integrating symbolic and hierarchical methods, such as Hierarchical Task Networks (HTNs) and Bayesian Strategy Networks (BSNs), with multi-agent reinforcement learning (MARL). These methods decompose complex goals into manageable subgoals, creating an intrinsic curriculum that shapes agent learning. We propose that such structured environments are essential for bridging the gap between simple, reactive behaviors and sophisticated, strategic team play. We further extend this principle, proposing that this scaffolding can be generalized to other complex domains and dynamically generated by Large Language Models (LLMs), which act as generative world models of tasks. By building environments with explicit, composable task layers, we can guide agent exploration more efficiently, generate meaningful learning signals, and ultimately train more capable and general-purpose agents with fewer resources than purely end-to-end approaches.

@article{languagedriven2025, title = {Generative World Models of Tasks: LLM-Driven Hierarchical Scaffolding for Embodied Agents}, author = {Hill, Brennen A.}, journal = {NeurIPS 2025 Workshop on Embodied World Models for Decision Making}, year = {2025}, }

- HEFT: A Coarse-to-Fine Hierarchy for Enhancing the Efficiency and Accuracy of Language Model Reasoning. Brennen A. Hill. In review.

The adaptation of large language models (LLMs) to specialized reasoning tasks is fundamentally constrained by computational resources. Parameter-Efficient Fine-Tuning (PEFT) methods have emerged as a powerful solution, yet the landscape of these techniques is diverse, with distinct methods operating in either the model’s weight space or its representation space. This paper investigates the hypothesis that a synergistic combination of these paradigms can unlock superior performance and efficiency. We introduce HEFT (Hierarchical Efficient Fine-Tuning), a novel hierarchical adaptation strategy that composes two distinct PEFT methods in a coarse-to-fine manner: first, a broad, foundational adaptation in the weight space using Low-Rank Adaptation (LoRA), followed by a precise, surgical refinement of internal activations using Representation Fine-Tuning (ReFT). We evaluate this approach by fine-tuning a Llama-2-7B model on the BoolQ benchmark, a challenging dataset for inferential reasoning. Our results reveal a profound synergistic effect. A model fine-tuned for only three epochs with our HEFT strategy achieves an accuracy of 85.17%, exceeding the performance of models trained for 20 epochs with either LoRA-only (85.05%) or ReFT-only (83.36%) methodologies. This work demonstrates that the thoughtful composition of PEFT methods is a potent algorithmic innovation, offering a more efficient and effective path toward advancing the reasoning capabilities of language models. By achieving superior results with a fraction of the computational budget, our findings present a principled approach to overcoming the obstacles inherent in adapting large-scale models for complex cognitive tasks.
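The coarse-to-fine composition in this abstract pairs a weight-space update with a representation-space edit. The sketch below shows only the forward computation on tiny hand-picked matrices, using the standard LoRA form `W x + (alpha / r) * B A x` and the low-rank ReFT (LoReFT) intervention `h + R^T (W_proj h + b - R h)`. It is an illustration under assumptions, not the HEFT training procedure: the helper names, dimensions, and the choice to apply the intervention directly to the LoRA layer's output are all hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float, rank: int) -> Vector:
    """Coarse stage: frozen weight W plus a scaled low-rank update B @ A."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


def loreft_intervention(h: Vector, R: Matrix, W_proj: Matrix, b: Vector) -> Vector:
    """Fine stage: edit the hidden state only inside the subspace spanned by R's rows."""
    target = [p + bias for p, bias in zip(matvec(W_proj, h), b)]
    current = matvec(R, h)
    diff = [t - c for t, c in zip(target, current)]
    correction = matvec(transpose(R), diff)
    return [hi + ci for hi, ci in zip(h, correction)]


# Toy 2-dimensional example with rank-1 adapters (values chosen by hand).
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]           # rank x d
B = [[1.0], [0.0]]         # d x rank
h = lora_forward(W, A, B, x=[1.0, 2.0], alpha=2.0, rank=1)

R = [[1.0, 0.0]]           # orthonormal rows defining the edited subspace
h_edited = loreft_intervention(h, R, W_proj=[[0.0, 0.0]], b=[5.0])
```

In this toy case the LoRA stage shifts the whole output, and the intervention then overwrites only the first coordinate, leaving the component outside the subspace of `R` unchanged.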

@article{heft2025, title = {HEFT: A Coarse-to-Fine Hierarchy for Enhancing the Efficiency and Accuracy of Language Model Reasoning}, author = {Hill, Brennen A.}, journal = {In review}, year = {2025}, }

- Breaking to Build: A Threat Model of Prompt-Based Attacks for Securing LLMs. Brennen A. Hill, Surendra Parla, Venkata Abhijeeth Balabhadruni, Atharv Prajod Padmalayam, and Sujay Chandra Shekara Sharma. In review.

The proliferation of Large Language Models (LLMs) has introduced critical security challenges, where adversarial actors can manipulate input prompts to cause significant harm and circumvent safety alignments. These prompt-based attacks exploit vulnerabilities in a model’s design, training, and contextual understanding, leading to intellectual property theft, misinformation generation, and erosion of user trust. A systematic understanding of these attack vectors is the foundational step toward developing robust countermeasures. This paper presents a comprehensive literature survey of prompt-based attack methodologies, categorizing them to provide a clear threat model. By detailing the mechanisms and impacts of these exploits, this survey aims to inform the research community’s efforts in building the next generation of secure LLMs that are inherently resistant to unauthorized distillation, fine-tuning, and editing.

@article{threatmodel2025, title = {Breaking to Build: A Threat Model of Prompt-Based Attacks for Securing LLMs}, author = {Hill, Brennen A. and Parla, Surendra and Balabhadruni, Venkata Abhijeeth and Padmalayam, Atharv Prajod and Sharma, Sujay Chandra Shekara}, journal = {In review}, year = {2025}, }